(April 26 2025) What a great show! Here’s the video:

Hey there Aaron Swartz Day community!

We are over here just trying to be interesting and useful ^_^

Sorry it’s taken so long to get to our next podcast! Life events made us delay it a couple of times, but we should be all good for this Saturday, April 26th, at 2pm PDT/9pm UTC.

Information about our April 26th podcast:

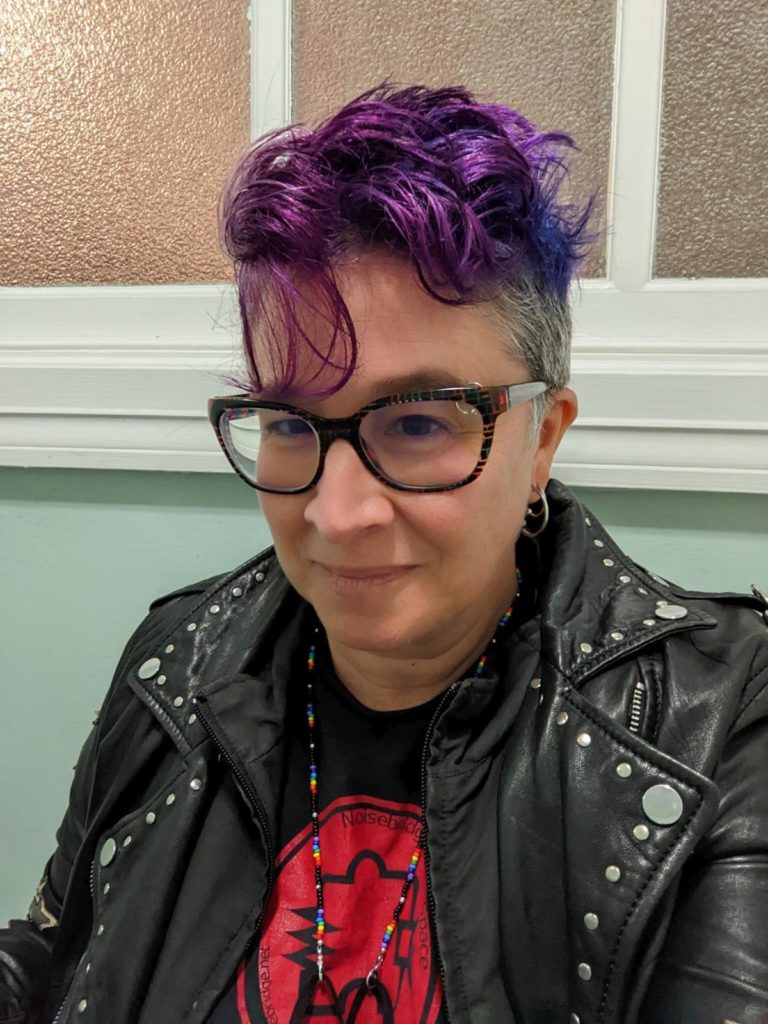

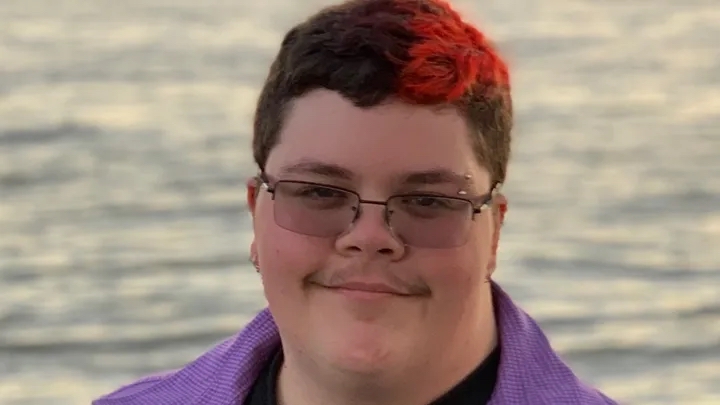

Gavin Grimm will be giving us an update on the many sordid operations of the recent administration and the various ways that people have managed to push back on these policies.

From Gavin:

The attack on migrants continues with the Trump administration at the helm of more ICE raids, which are being carried out more aggressively and with less oversight than during the Biden administration. ICE has been reported attempting to enter churches, hospitals and even elementary schools. In many cases they have been successful. In some cases, however, people have fought back. A sanctuary church in New York City refused entry to ICE, as did an elementary school in LA. However, the attacks only continue to intensify, with the State Department revoking at least 300 student visas and the IRS choosing to share tax data with immigration authorities.

As a result, people have been voicing their outrage. In early April, over 1,400 protests took place across all 50 states. Called the Hands Off protests, these were pro-democracy protests held to express people’s discontent with the ruling forces of Elon Musk and Donald Trump, along with the rest of the cabinet. More actions are planned for the future, with Hands Off organizers suggesting that the total number of attendees across the nation and in cities like Paris and London could number in the millions.

Finally, despite the accelerated attacks on trans people socially and on trans rights legally, there are people fighting back. Since late December, when the Gender Liberation Movement staged a sit-in at the Capitol Hill bathroom near Speaker Mike Johnson’s office, people have continued to push back against the Trump administration’s brazen attack on transgender people and their rights. Many organizations have identified the increased risk that trans people face in this moment and have chosen to speak out, including the Chicago Teachers Union. In addition, legal challenges are still going ahead, like the federal lawsuit brought by 7 transgender and nonbinary individuals against the Trump administration for refusing to issue passports that reflect a transgender person’s gender identity, instead issuing documents based on sex at birth.