From September 13, 2017

By Chelsea Manning

In recent years our military, law enforcement and intelligence agencies have merged in unexpected ways. They harvest more data than they can possibly manage, and wade through the quantifiable world side by side in vast, usually windowless buildings called fusion centers.

Such powerful new relationships have created a foundation for, and have breathed life into, a vast police and surveillance state. Advanced algorithms have made this possible on an unprecedented level. Relatively minor infractions, or “microcrimes,” can now be policed aggressively. And with national databases shared among governments and corporations, these minor incidents can follow you forever, even if the information is incorrect or lacking context…

In literature and pop culture, concepts such as “thoughtcrime” and “precrime” have emerged out of dystopian fiction. They are used to restrict and punish anyone who is flagged by automated systems as a potential criminal or threat, even if a crime has yet to be committed. But this science fiction trope is quickly becoming reality. Predictive policing algorithms are already being used to create automated heat maps of future crimes, and like the “manual” policing that came before them, they overwhelmingly target poor and minority neighborhoods.

The world has become like an eerily banal dystopian novel. Things look the same on the surface, but they are not. With no apparent boundaries on how algorithms can use and abuse the data that’s being collected about us, the potential for it to control our lives is ever-growing.

*** Full text below for archival purposes ***

For seven years, I didn’t exist.

While incarcerated, I had no bank statements, no bills, no credit history. In our interconnected world of big data, I appeared to be no different than a deceased person. After I was released, that lack of information about me created a host of problems, from difficulty accessing bank accounts to trouble getting a driver’s license and renting an apartment.

In 2010, the iPhone was only three years old, and many people still didn’t see smartphones as the indispensable digital appendages they are today. Seven years later, virtually everything we do causes us to bleed digital information, putting us at the mercy of invisible algorithms that threaten to consume our freedom.

Information leakage can seem innocuous in some respects. After all, why worry when we have nothing to hide?

We file our taxes. We make phone calls. We send emails. Tax records are used to keep us honest. We agree to broadcast our location so we can check the weather on our smartphones. Records of our calls, texts and physical movements are filed away alongside our billing information. Perhaps that data is analyzed more covertly to make sure that we’re not terrorists — but only in the interest of national security, we’re assured.

Our faces and voices are recorded by surveillance cameras and other internet-connected sensors, some of which we now willingly put inside our homes. Every time we load a news article or page on a social media site, we expose ourselves to tracking code, allowing hundreds of unknown entities to monitor our shopping and online browsing habits. We agree to cryptic terms-of-service agreements that obscure the true nature and scope of these transactions.

According to a 2015 study from the Pew Research Center, 91 percent of American adults believe they’ve lost control over how their personal information is collected and used.

Just how much they’ve lost, however, is more than they likely suspect.

The real power of mass data collection lies in the hand-tailored algorithms capable of sifting, sorting and identifying patterns within the data itself. When enough information is collected over time, governments and corporations can use or abuse those patterns to predict future human behavior. Our data establishes a “pattern of life” from seemingly harmless digital residue like cellphone tower pings, credit card transactions and web browsing histories.

The consequences of our being subjected to constant algorithmic scrutiny are often unclear. For instance, artificial intelligence, Silicon Valley’s catchall term for deep-thinking and deep-learning algorithms, is touted by tech companies as a path to the high-tech conveniences of the so-called internet of things. This includes digital home assistants, connected appliances and self-driving cars.

Simultaneously, algorithms are already analyzing social media habits, determining creditworthiness, deciding which job candidates get called in for an interview and judging whether criminal defendants should be released on bail. Other machine-learning systems use automated facial analysis to detect and track emotions, or claim the ability to predict whether someone will become a criminal based only on their facial features.

These systems leave no room for humanity, yet they define our daily lives. When I began rebuilding my life this summer, I painfully discovered that they have no time for people who have fallen off the grid — such nuance eludes them. I came out publicly as transgender and began hormone replacement therapy while in prison. When I was released, however, there was no quantifiable history of me existing as a trans woman. Credit and background checks automatically assumed I was committing fraud. My bank accounts were still under my old name, which legally no longer existed. For months I had to carry around a large folder containing my old ID and a copy of the court order declaring my name change. Even then, human clerks and bank tellers would sometimes see the discrepancy, shrug and say “the computer says no” while denying me access to my accounts.

Such programmatic, machine-driven thinking has become especially dangerous in the hands of governments and the police.

In recent years our military, law enforcement and intelligence agencies have merged in unexpected ways. They harvest more data than they can possibly manage, and wade through the quantifiable world side by side in vast, usually windowless buildings called fusion centers.

Such powerful new relationships have created a foundation for, and have breathed life into, a vast police and surveillance state. Advanced algorithms have made this possible on an unprecedented level. Relatively minor infractions, or “microcrimes,” can now be policed aggressively. And with national databases shared among governments and corporations, these minor incidents can follow you forever, even if the information is incorrect or lacking context.

At the same time, the United States military uses the metadata of countless communications for drone attacks, using pings emitted from cellphones to track and eliminate targets.

In literature and pop culture, concepts such as “thoughtcrime” and “precrime” have emerged out of dystopian fiction. They are used to restrict and punish anyone who is flagged by automated systems as a potential criminal or threat, even if a crime has yet to be committed. But this science fiction trope is quickly becoming reality. Predictive policing algorithms are already being used to create automated heat maps of future crimes, and like the “manual” policing that came before them, they overwhelmingly target poor and minority neighborhoods.

The world has become like an eerily banal dystopian novel. Things look the same on the surface, but they are not. With no apparent boundaries on how algorithms can use and abuse the data that’s being collected about us, the potential for it to control our lives is ever-growing.

Our drivers’ licenses, our keys, our debit and credit cards are all important parts of our lives. Even our social media accounts could soon become crucial components of being fully functional members of society. Now that we live in this world, we must figure out how to maintain our connection with society without surrendering to automated processes that we can neither see nor control.

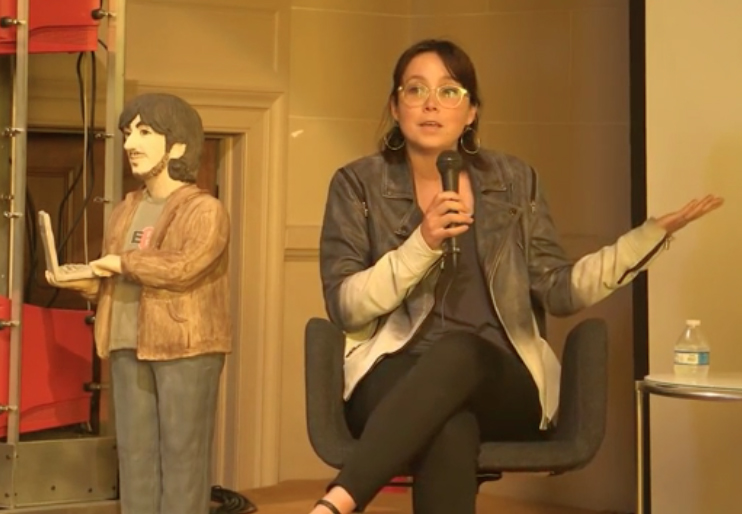

Caroline Sinders
