TICKETS

The Internet Archive is hosting the Fifth Annual Aaron Swartz Day International Hackathon and Evening Event:

Location: Internet Archive, 300 Funston Ave, San Francisco, CA 94118

November 4, 2017: 6:00pm – 7:00pm (Reception), 7:30pm – 9:30pm (Speakers)

The purpose of the evening event, as always, is to inspire direct action toward improving the world. Everyone has been asked to speak about whatever they feel is most important.

The event will take place following this year’s San Francisco Aaron Swartz International Hackathon, which runs Saturday, November 4, from 10am-6pm and Sunday, November 5, from 11am-6pm at the Internet Archive.

Hackathon Reception: 6:00pm-7:00pm – (A paid ticket for the evening event also gets you into the Hackathon Reception.)

Come talk to the speakers and the rest of the Aaron Swartz Day community, and join us in celebrating the many incredible things that we’ve accomplished this year! (Although there is still much work to be done.)

We will toast to the launch of the Pursuance Project (an open source, end-to-end encrypted Project Management suite, envisioned by Barrett Brown and brought to life by Steve Phillips).

Migrate your way upstairs: 7:00pm-7:30pm – The speakers are starting early this year, at 7:30pm, and we are providing a stretch break at 8:15pm, which also gives anyone who arrived late a chance to come in.

Speakers upstairs begin at 7:30 pm.

Speakers in reverse order:

Chelsea Manning (Network Security Expert, Former Intelligence Analyst)

Lisa Rein (Chelsea Manning’s Archivist, Co-founder Creative Commons, Co-founder Aaron Swartz Day)

Daniel Rigmaiden (Transparency Advocate)

Barrett Brown (Journalist, Activist, Founder of the Pursuance Project) (via SKYPE)

Jason Leopold (Senior Investigative Reporter, BuzzFeed News)

Jennifer Helsby (Lead Developer, SecureDrop, Freedom of the Press Foundation)

Cindy Cohn (Executive Director, Electronic Frontier Foundation)

Gabriella Coleman (Hacker Anthropologist, Author, Researcher, Educator)

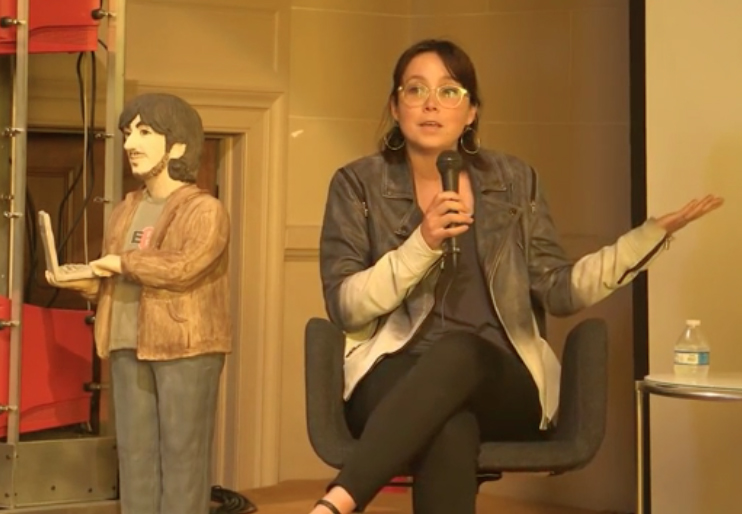

Caroline Sinders (Designer/Researcher, Wikimedia Foundation, Creative Dissent Fellow, YBCA)

Brewster Kahle (Co-founder and Digital Librarian, Internet Archive, Co-founder Aaron Swartz Day)

Steve Phillips (Project Manager, Pursuance)

Mek Karpeles (Citizen of the World, Internet Archive)

Brenton Cheng (Senior Engineer, Open Library, Internet Archive)

About the Speakers:

Chelsea Manning – Network Security Expert, Transparency Advocate

Chelsea E. Manning is a network security expert, whistleblower, and former U.S. Army intelligence analyst. While serving seven years of an unprecedented 35-year sentence for a high-profile leak of government documents, she became a prominent and vocal advocate for government transparency and transgender rights, both on Twitter and through her op-ed columns for The Guardian and The New York Times. She currently lives in the Washington, D.C. area, where she writes about technology, artificial intelligence, and human rights.

Lisa Rein – Chelsea Manning’s Archivist, Co-founder, Aaron Swartz Day & Creative Commons

Lisa Rein is Chelsea Manning’s archivist, and ran her @xychelsea Twitter account from December 2015 – May 2017. She is a co-founder of Creative Commons, where she worked with Aaron Swartz on its technical specification, when he was only 15. She is a writer, musician and technology consultant, and lectures for San Francisco State University’s BECA department. Lisa is the Digital Librarian for the Dr. Timothy Leary Futique Trust.

Daniel Rigmaiden – Transparency Advocate

Daniel Rigmaiden became a government transparency advocate after U.S. law enforcement used a secret cell phone surveillance device to locate him inside his home. The device, often called a “Stingray,” simulates a cell tower and tricks cell phones into connecting to a law enforcement controlled cellular network used to identify, locate, and sometimes collect the communications content of cell phone users. Before Rigmaiden brought Stingrays into the public spotlight in 2011, law enforcement concealed use of the device from judges, defense attorneys and defendants, and would typically not obtain a proper warrant before deploying the device.

Barrett Brown – Journalist, Activist, and Founder of the Pursuance Project

Barrett Brown is a writer and anarchist activist. His work has appeared in Vanity Fair, the Guardian, The Intercept, Huffington Post, New York Press, Skeptic, The Daily Beast, Al Jazeera, and dozens of other outlets. In 2009 he founded Project PM, a distributed think-tank, which was later re-purposed to oversee a crowd-sourced investigation into the private espionage industry and the intelligence community at large via e-mails stolen from federal contractors and other sources. In 2011 and 2012 he worked with Anonymous on campaigns involving the Tunisian revolution, government misconduct, and other issues. In mid-2012 he was arrested and later sentenced to four years in federal prison on charges stemming from his investigations and work with Anonymous. While imprisoned, he won the National Magazine Award for his column, The Barrett Brown Review of Arts and Letters and Prison. Upon his release, in late 2016, he began work on the Pursuance System, a platform for mass civic engagement and coordinated opposition. His third book, a memoir/manifesto, will be released in 2018 by Farrar, Straus and Giroux.

Jason Leopold, Senior Investigative Reporter, BuzzFeed News

Jason Leopold is an Emmy-nominated investigative reporter on the BuzzFeed News Investigative Team. Leopold’s reporting and aggressive use of the Freedom of Information Act have been profiled by dozens of media outlets, including a 2015 front-page story in The New York Times. Politico referred to Leopold in 2015 as “perhaps the most prolific Freedom of Information requester.” That year, Leopold, dubbed a ‘FOIA terrorist’ by the US government, testified before Congress about FOIA. In 2016, Leopold was awarded the FOI award from Investigative Reporters & Editors and was inducted into the National Freedom of Information Hall of Fame by the Newseum Institute and the First Amendment Center.

Jennifer Helsby, Lead Developer, SecureDrop (Freedom of the Press Foundation)

Jennifer is Lead Developer of SecureDrop. Prior to joining FPF, she was a postdoctoral researcher at the Center for Data Science and Public Policy at the University of Chicago, where she worked on applying machine learning methods to problems in public policy. Jennifer is also the CTO and co-founder of Lucy Parsons Labs, a non-profit that focuses on police accountability and surveillance oversight. In a former life, she studied the large scale structure of the universe, and received her Ph.D. in astrophysics from the University of Chicago in 2015.

Cindy Cohn – Executive Director, Electronic Frontier Foundation (EFF)

Cindy Cohn is the Executive Director of the Electronic Frontier Foundation. From 2000-2015 she served as EFF’s Legal Director as well as its General Counsel. The National Law Journal named Ms. Cohn one of the 100 most influential lawyers in America in 2013, noting: “[I]f Big Brother is watching, he better look out for Cindy Cohn.”

Gabriella Coleman – Hacker Anthropologist, Author, Researcher, Educator

Gabriella (Biella) Coleman holds the Wolfe Chair in Scientific and Technological Literacy at McGill University. Trained as an anthropologist, her scholarship explores the politics and cultures of hacking, with a focus on the sociopolitical implications of the free software movement and the digital protest ensemble Anonymous. She has authored two books, Coding Freedom: The Ethics and Aesthetics of Hacking (Princeton University Press, 2012) and Hacker, Hoaxer, Whistleblower, Spy: The Many Faces of Anonymous (Verso, 2014).

Caroline Sinders – Researcher/Designer, Wikimedia Foundation

Caroline Sinders is a machine learning designer, user researcher, and artist. For the past few years, she has been focusing on the intersections of natural language processing, artificial intelligence, abuse, online harassment and politics in digital, conversational spaces. Caroline is a designer and researcher at the Wikimedia Foundation, and a Creative Dissent fellow with YBCA. She holds a master’s from New York University’s Interactive Telecommunications Program.

Brewster Kahle, Founder & Digital Librarian, Internet Archive

Brewster Kahle has spent his career intent on a singular focus: providing Universal Access to All Knowledge. He is the founder and Digital Librarian of the Internet Archive, which now preserves 20 petabytes of data – the books, Web pages, music, television, and software of our cultural heritage, working with more than 400 library and university partners to create a digital library, accessible to all.

Steve Phillips, Project Manager, Pursuance Project

Steve Phillips is a programmer, philosopher, and cypherpunk, and is currently the Project Manager of Barrett Brown’s Pursuance Project. In 2010, after double-majoring in mathematics and philosophy at UC Santa Barbara, Steve co-founded Santa Barbara Hackerspace. In 2012, in response to his concerns over rumored mass surveillance, he created his first secure application, Cloakcast. And in 2015, he spoke at the DEF CON hacker conference, where he presented CrypTag. Steve has written over 1,000,000 words of philosophy culminating in a new philosophical methodology, Executable Philosophy.

Mek Karpeles, Citizen of the World, Internet Archive

Mek is a citizen of the world at the Internet Archive. His life mission is to organize a living map of the world’s knowledge. With it, he aspires to empower every person to overcome oppression, find and create opportunity, and reach their fullest potential to do good. Mek’s favorite media includes non-fiction books and academic journals — tools to educate the future — which he proudly helps make available through his work on Open Library.

Brenton Cheng, Senior Engineer, Open Library, Internet Archive

Brenton Cheng is a technology-wielding explorer, inventor, and systems thinker. He spearheads the technical and product development of Open Library and the user-facing Archive.org website. He is also an adjunct professor in the Performing Arts & Social Justice department at University of San Francisco.

For more information, contact:

Lisa Rein, Co-founder, Aaron Swartz Day

lisa@lisarein.com

http://www.aaronswartzday.org
